No AI

We avoid AI simply because of its poor ratio between cost and value.

We’ve delayed this decision for a while, but we’ve ultimately decided against incorporating AI into our system.

Our reasoning is as follows:

- We firmly believe that AI (or more specifically, large language models) excels at generating content but falls short in delivering value.

- We firmly believe that productivity-focused systems should prioritize maximizing value.

- We firmly believe that unreliable value is counterproductive.

To illustrate our point, consider a few examples:

- We once spent an entire day trying to use AI to create a list of 25 games based on a set of 10 questions. However, only one game per series was allowed, and on multiple occasions, the AI would suggest a sequel even though this was explicitly against the rules. When we asked it if it noticed its mistake, it would lie in various ways and even change the questions.

- We occasionally use LLMs to spellcheck or improve the grammar of some text (not code) and have noticed that they sometimes invert statements from "MUST NOT" to "MUST," thereby altering the meaning of the sentence.

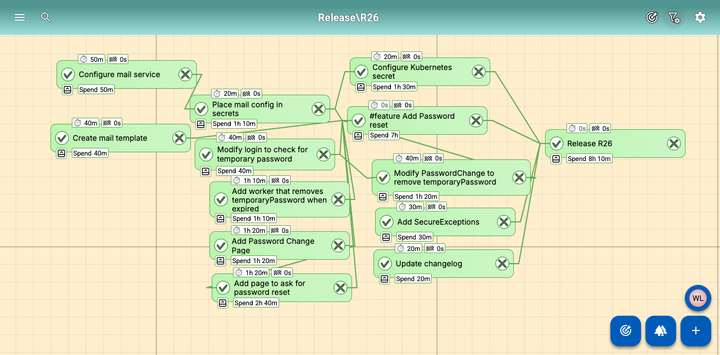

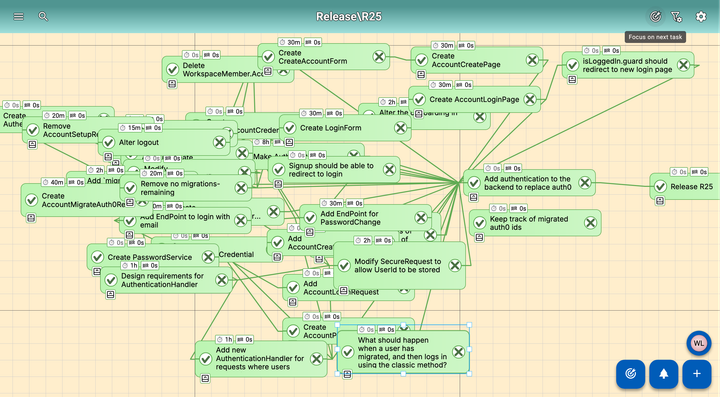

- We’ve come across systems that provide a hierarchical checklist based on a simple concept, including time breakdowns. We admit that we find those systems quite impressive. However, when we experimented with them, we could only conclude that the output almost always contains errors.

To illustrate this further: when a task is first placed on a map, we let the server modify the placement to ensure it does not overlap with any other elements. This is one of the few pieces of our code that is AI-generated. We used agentic AI to generate the code, despite already knowing the algorithm. We did so as an experiment, but also because we were working on something else.
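For readers unfamiliar with this kind of placement adjustment, here is a minimal Python sketch of the general idea. It is not Todo2d's actual code, and the real algorithm may differ. The `Rect` type, the `place_without_overlap` function, and the `step` and `max_rings` parameters are hypothetical names chosen purely for illustration. The sketch assumes axis-aligned rectangles and simply searches outward in square rings for the nearest free grid position.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Hypothetical axis-aligned element on the map."""
    x: int
    y: int
    w: int
    h: int


def overlaps(a: Rect, b: Rect) -> bool:
    # Two rectangles overlap when they intersect on both axes.
    # Rectangles that only share an edge do not count as overlapping.
    return (a.x < b.x + b.w and b.x < a.x + a.w
            and a.y < b.y + b.h and b.y < a.y + a.h)


def place_without_overlap(task: Rect, existing: list[Rect],
                          step: int = 10, max_rings: int = 100) -> Rect:
    """Return `task`, moved if needed so it overlaps nothing in `existing`."""
    if not any(overlaps(task, other) for other in existing):
        return task  # the requested position is already free

    # Search outward, one square ring of grid offsets at a time.
    for ring in range(1, max_rings + 1):
        for dx in range(-ring, ring + 1):
            for dy in range(-ring, ring + 1):
                if max(abs(dx), abs(dy)) != ring:
                    continue  # only visit the border of the current ring
                candidate = Rect(task.x + dx * step, task.y + dy * step,
                                 task.w, task.h)
                if not any(overlaps(candidate, other) for other in existing):
                    return candidate

    raise ValueError("no free position found within the search radius")
```

Even a small routine like this has edge cases (touching edges, search limits, tie-breaking between equally near positions) that are easy to get subtly wrong, which is why tests around it matter.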

To get the agent to work, we gave it abstract overviews of the multiple algorithms we needed, along with step-by-step instructions. We had estimated the work ourselves and anticipated that we could implement it in approximately 40 hours. However, the AI took two weeks to produce a usable result that met our expectations. More than once we had to manually review the code, only to discover that an algorithm had been implemented incorrectly or lacked the output required for future use.

Eventually we decided to write all unit tests ourselves and forbade the AI from touching them. Even those explicit instructions were ignored on more than one occasion. In the end (after a day of reviewing the code) we were able to confirm that the output did exactly what we wanted.

However, this also marked the point at which we stopped using AI altogether, since we consider the entire experiment a failure. While the AI occasionally produced successful results, there were also many times when it produced something unusable or reverted something that already worked.

Without proper scrutiny, it’s incredibly easy to introduce errors without realizing it.

Therefore, we concluded that in our future development, the developer’s intention is of utmost importance in achieving a desired outcome. However, AI lacks the ability to comprehend intention or question it. It’s designed to produce output that we find acceptable, even if the request is impossible. Through other experiments, we’ve come to realize that this applies not only to code but also to other areas, such as writing or producing results based on strict requirements.

Consequently, Todo2d will not provide AI functionality on its own, and we will restrict our own use of AI as much as we can. A single erroneous output can have a significant negative impact on your day.

We are not saying AI should be avoided entirely. We have also had projects in which AI was a perfect fit for the use case, but those relied on more classical solutions, not on large language models intended for general-purpose use. Our decision is thus motivated by cost and value.

By the way, if you really want to use AI you can integrate it using our SDK. We have done this as an experiment.